Walking Eye, Hank! They’re all the same.

[youtube https://www.youtube.com/watch?v=f77gw2Pp3aY&w=530]

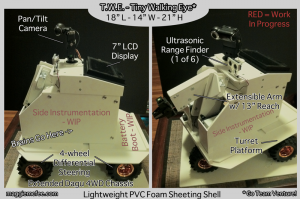

I’ve been working on a robot for a while. Well, a specific robot. I’ve tinkered with some others prior to and along the way. But for this particular bot, I started fiddling with servos and controllers for an arm last winter and since then I’ve bought a bunch of micro-controllers and itsy bitsy computers to fiddle with too. The results of all that fiddling have been sort of percolating in my head and have recently, in long bursts of work, been spat out into this, the Tiny Walking Eye. I never intended to do a pre-design, per se, and I’ve let all the ideas sort of clump together so that I knew roughly what I wanted to build, just not exactly how I’d build it. I built an eye. Then an arm. Then… I built all that you see below in a couple of long, late nights. Given that I ‘made it up as I went’, I’m fairly pleased with the aesthetics of it so far as well. Nobody wants an ugly robot.

OK, it’s not actually tiny, it’s about 22 inches high at the top of the video camera. And it doesn’t walk, it has 4 drive wheels in a differential (‘tank’) steering configuration. And the eye is a camera. But my friend Christopher (who I also do a podcast with) and I were making Venture Brothers jokes and I got fixated on “Giant Walking Eye” and so TWE was christened.
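For anyone who hasn’t played with ‘tank’ steering: each side of the chassis gets its own speed, and the difference between the two is what turns the bot. Here’s a minimal sketch of that mixing in Python. It is not the code running on TWE; the function name `tank_mix` and the -1 to 1 input range are assumptions I’m making purely for illustration.

```python
from typing import Tuple


def tank_mix(throttle: float, turn: float) -> Tuple[float, float]:
    """Mix throttle and turn (each assumed to be in -1..1) into
    (left, right) side speeds, also in -1..1.

    Hypothetical helper for illustration; the name and ranges are assumptions.
    """
    # Positive turn speeds up the left side and slows the right,
    # which steers the bot to the right.
    left = throttle + turn
    right = throttle - turn

    # If either side would exceed full speed, scale both down together
    # so the ratio between them (and so the turn) is preserved.
    scale = max(1.0, abs(left), abs(right))
    return left / scale, right / scale


if __name__ == "__main__":
    print(tank_mix(1.0, 0.0))   # straight ahead
    print(tank_mix(0.0, 1.0))   # spin in place
    print(tank_mix(0.5, 0.25))  # gentle right turn
```

The two outputs would then be handed to whatever motor controllers drive the left and right pairs of wheels.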

The chassis is a Dagu 4WD Wild Thumper bot chassis (ordered from Pololu) which I’ve extended a few inches. In hindsight, the 6WD chassis would have been better. Maybe for TWE2. The shell is foam PVC sheeting which is easy to cut for someone who lives in an apartment and doesn’t want to annoy her neighbors (more than she does already). Also, it’s very light and I need to keep everything that sits on the chassis under about 10 pounds. The chassis undercarriage has a power distro box with motor controllers, an emergency cut-off switch and two 7.2v batteries in parallel for the motors.

There’s a Mars Rover-esque platform that sits on a revolving turret on the chassis. On top of that is the brain box which also houses the front-facing arm. The arm is also made from .157 PVC and is powered by tandem servos (one reversed so that they lift in concert) for shoulder and elbow. The two large-scale shoulder servos are Hi-Tecs and the two in the elbow are some crazy Chinese servos I found on Amazon which have huge amounts of torque and metal gearing. The elbow will do most of the work by itself and the shoulder is only needed when the arm needs to be extended. The wrist ends with a gripper which I bought from Parallax or RobotShop or somewhere. I’ll be using Phidgets or Pololu controllers for the servos (depending on which ‘network’ I end up using; more below).
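The ‘one reversed’ trick for tandem servos is easy to get backwards, so here’s a rough sketch of the idea in Python. Two servos facing each other across a joint have to rotate in opposite directions to lift together, so the second one gets the mirror image of the first one’s pulse. The 1000 to 2000 µs pulse range and 180° of travel are typical hobby-servo numbers that I’m assuming here, not measured values for these particular servos, and `tandem_pulses` is a made-up name.

```python
def tandem_pulses(angle_deg, min_us=1000, max_us=2000, travel_deg=180.0):
    """Return (primary_us, mirrored_us) pulse widths for a pair of servos
    mounted facing each other on the same joint.

    Hypothetical helper; the pulse range and travel are assumed defaults.
    """
    # Clamp the request to the servo's assumed travel.
    angle = min(max(angle_deg, 0.0), travel_deg)

    # Linear map from joint angle to pulse width for the first servo.
    primary = min_us + (max_us - min_us) * angle / travel_deg

    # The second servo is mounted reversed, so mirror its pulse
    # around the centre of the range.
    mirrored = min_us + max_us - primary

    return round(primary), round(mirrored)


if __name__ == "__main__":
    for angle in (0, 45, 90, 180):
        print(angle, tandem_pulses(angle))
```

In practice each pair would still need trimming, since two servos rarely share exactly the same centre point and a mismatch means they fight each other.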

The white PVC and angled cuts give it a sort of 70s sensibility and I’m OK with that. Plus, you should note that there’s nothing on the side yet and there will be. There’s only one ultrasound range finder right now, but I’ll be putting 5 more on (one on each angled corner and one in the rear) as well as some other goodies on the side. And in the rear will be a little boot to put the secondary batteries in (two 6v totaling ~ 9Ah for the processors and controllers).

Up top there’s a camera which pans and tilts and immediately behind that is a 7″ LCD display. The display will be hooked into the core micro-controller (probably a Parallax Propeller board) which may or may not also be hooked to an ultra-small PC (a Gumstix Fire or a Genesi Efika, both of which I’m playing with). This all depends on how I want the robot to be controlled. Without the PC, I’d be making it completely autonomous (maybe an XBee for remote control or logging). With the PC, I’d be able to store and execute more complex code and also tie back via Wi-Fi to another computer where I could assume manual control if desired. I haven’t decided. Maybe I’ll try to make it do both. It all comes down to software and batteries.

Additionally, I need to decide how this will all be connected. Depending on which controllers and computers end up inside, it will either be primarily USB, Ethernet or a mixture of I²C and USB. I’ve mocked up both and there are benefits to each. We’ll see. USB is winning at the moment given that all the controllers already inside have USB ports and everything else could be wired to the micro-controller (which also has USB).

Then, I need to figure out how it recharges itself. That’ll involve building a charging circuit for the various battery systems and a station it can find on its own (probably using RFID triangulation).

ANYway… that’s the state of TWE. In case you were wondering. Which you totally were. Hope you enjoyed. Cuz… everybody needs a robot. For… ‘reconnaissance applications’.

UPDATE FROM THE FUTURE!

Sorry I didn’t update this more as TWE progressed. I moved to a real shop at the Artisan’s Asylum and worked on TWE in fits and bursts. I put in control systems for the drivetrain, arm and sensors. Played around with ROS and OpenQbo and whatnot. All in all, TWE was a fair success (there were things I could have done much better, admittedly) and, admit it, he’s adorable.

But then, alas, other projects got in the way and TWE ended up on a shelf. I eventually pulled out and loaned the drivetrain to Brandon from Rascal Micro for a while to do some demos on and I pulled a few things out here and there for other projects.

BUT! Don’t be sad. This week I took the drivetrain out and reprogrammed the controllers. I removed the arm (that arm turned out good, dude. Seriously.) and the dual cameras from what’s left of TWE’s chassis, and am tinkering with a different idea now. It’s already got a name of course. Because I have to name everything. SHANE. I was running the drivetrain up and down the aisle at the Asylum using an RC controller and, joking to a fellow inmate, I called ‘Come back, Shane!’ And that’s how names are gotten. ;)

Anywho. TWE has left us, long live TWE. But something new to come. :)